Russian doll caching - lightning fast rendering

I spent a hackday looking at implementing a Russian doll based HTML cache for the CMS. I found the result so interesting that I thought I should share it. I will describe Russian doll caching in more detail later, but in short the idea is to cache chunks of already rendered HTML. It is a fairly long post, but I hope you will find it worth reading, since it offers a huge performance boost!

A specific use case I had in mind was large sites where the rendering depends on a lot of content items. One example could be a commerce site where the start page contains several product listings and menus, which require a lot of content items to be rendered. Such sites are very dependent on the content cache to perform well. That means that when a new instance of the application is started (for example due to autoscaling in Azure), it must first load a lot of content items into the cache before it performs well.

Russian Doll caching

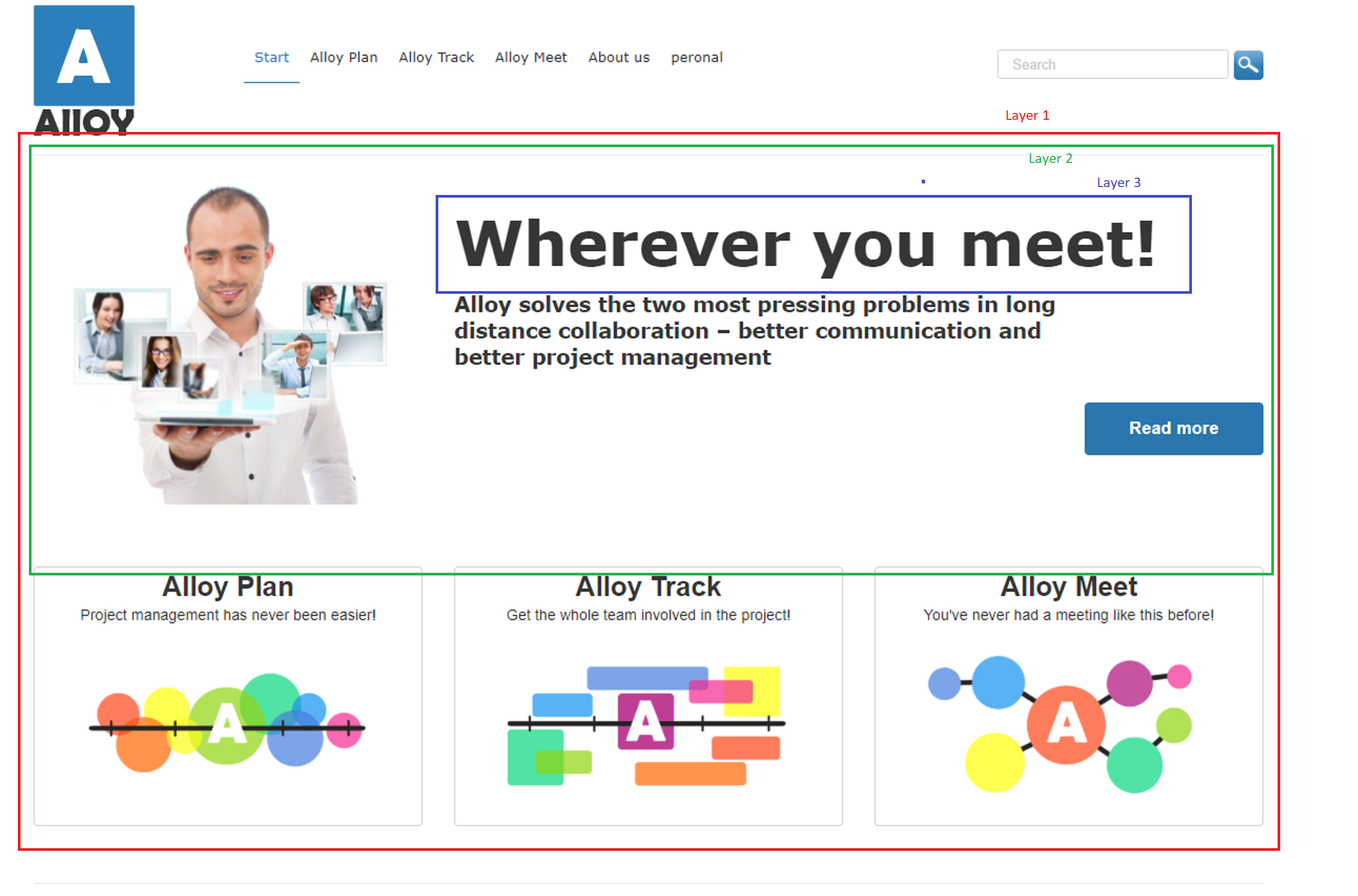

The idea with Russian doll caching is to cache items in several layers, where each layer has a dependency on the next layer. It is used, for example, in Ruby on Rails. As an example we can look at the content area rendered in the picture. The output of the content area as a whole is cached at layer 1. The output of each item in the content area is then cached at layer 2, and the individual properties of a content item are cached at layer 3.

There will be quite a lot of redundancy, meaning a specific HTML element might be cached several times in different layers, but there are some good benefits to this. The first is that since each layer depends on the next layer, cache evictions can be propagated quite nicely. Taking the content area as an example, if a content item in the area is changed, then the HTML for that specific item is evicted and that eviction is propagated to the next layer, meaning the HTML for the content area as a whole is evicted as well. The second benefit is that when one item is changed, the output for that item and for the content area as a whole gets evicted, but the output for all other items in the content area is still valid. That means that the next time the area is rendered, only that specific item needs to be rendered; its output is then put together with the other, already cached items to render the area as a whole.
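To make the layering and the eviction propagation concrete, here is a minimal in-memory sketch. It is not the code from my repository: RussianDollCache, ContentAreaDemo and their members are hypothetical names made up for illustration, it is not thread-safe, and it tracks only fragment-to-fragment dependencies.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Illustrative only: RussianDollCache and its members are hypothetical names.
public sealed class RussianDollCache
{
    private readonly Dictionary<string, string> _html = new Dictionary<string, string>();
    // Inner fragment key -> keys of the outer fragments that embed it.
    private readonly Dictionary<string, HashSet<string>> _parents = new Dictionary<string, HashSet<string>>();
    // Keys of the fragments being rendered right now, innermost on top.
    private readonly Stack<string> _rendering = new Stack<string>();

    // Number of times a render callback actually ran (cache misses).
    public int RenderCount { get; private set; }

    public string GetOrAdd(string key, Func<string> render)
    {
        // Remember that the fragment currently being rendered embeds this one.
        if (_rendering.Count > 0)
        {
            if (!_parents.TryGetValue(key, out var parents))
                _parents[key] = parents = new HashSet<string>();
            parents.Add(_rendering.Peek());
        }

        if (_html.TryGetValue(key, out var cached))
            return cached;

        _rendering.Push(key);
        try
        {
            RenderCount++;
            return _html[key] = render();
        }
        finally
        {
            _rendering.Pop();
        }
    }

    // Evicts a fragment and, recursively, every outer fragment built from it.
    public void Evict(string key)
    {
        _html.Remove(key);
        if (!_parents.TryGetValue(key, out var parents))
            return;
        _parents.Remove(key);
        foreach (var parent in parents)
            Evict(parent);
    }
}

public static class ContentAreaDemo
{
    public static string RenderArea(RussianDollCache cache, string[] itemIds, IDictionary<string, string> titles)
    {
        // Layer 1: the content area as a whole. Layer 2: each item in it.
        return cache.GetOrAdd("area", () =>
            "<div>" + string.Concat(itemIds.Select(id =>
                cache.GetOrAdd("item:" + id, () => "<p>" + titles[id] + "</p>"))) + "</div>");
    }
}
```

With two items, the first call to RenderArea runs three callbacks (the area and both items). After Evict("item:a"), the next call runs only two: the changed item and the area, while the other item is served from the cache.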

Distributed cache

Although it is possible to use this caching technique with an in-process cache, I think it is much better suited to an out-of-process distributed cache. One reason is that the cache will likely contain quite a lot of large HTML strings that fill up the memory. Another is the use case above: when a new instance of the site is spun up, it can serve HTML chunks fetched from the cache without having to fill up the object cache with a lot of content items. Those large sites could probably run with much less RAM as well because of that.

So I have looked at Redis for this. One common way to use Redis is as a distributed object cache, and the use case above could probably be helped by a distributed content cache. In my case, however, I use Redis as a cache for HTML. That avoids the cost of serializing and deserializing objects, and it also makes it possible to cache at a much higher level than with a distributed object cache.

IHtmlCache

I have created an interface, IHtmlCache, that can be used to get HTML from and add HTML to the cache. The interface is registered in the IoC container and has a single method:

    string GetOrAdd(string key, Func<IRenderingContext, string> renderingCallback);

The way to use the method is to first create a cache key that is unique for the context (it could be built from e.g. the current content, current property and current template). Then a call is made to GetOrAdd. If the item is already cached, you get the HTML back; otherwise the renderingCallback Func is called, where you specify how to render the HTML, and the result is then cached and returned. The IRenderingContext argument that gets passed to the callback makes it possible to, for example, add content dependencies. The current IRenderingContext can also be retrieved from the IoC container using IRenderingContextResolver. This can be useful if you have, for example, a listing block that depends on some listing; then you can add a dependency on that listing in, for example, the block controller (see PageListBlockController in my Alloy project).
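As a rough illustration of how GetOrAdd and content dependencies fit together, here is a minimal in-process sketch. Only the GetOrAdd signature is taken from the real interface. The AddDependency member, the InMemoryHtmlCache class, its Evict method and the HeadingRenderer helper are assumptions made up for this example, and unlike the real thing it does not propagate dependencies from nested calls to the outer fragment.

```csharp
using System;
using System.Collections.Generic;

public interface IRenderingContext
{
    // Hypothetical member: the real interface may expose dependencies differently.
    void AddDependency(int contentId);
}

public interface IHtmlCache
{
    string GetOrAdd(string key, Func<IRenderingContext, string> renderingCallback);
}

// Hypothetical in-process implementation, for illustration only.
public sealed class InMemoryHtmlCache : IHtmlCache
{
    private sealed class RenderingContext : IRenderingContext
    {
        public HashSet<int> ContentIds { get; } = new HashSet<int>();
        public void AddDependency(int contentId) => ContentIds.Add(contentId);
    }

    private readonly object _sync = new object();
    private readonly Dictionary<string, string> _html = new Dictionary<string, string>();
    // Content id -> cache keys of the fragments rendered from that content.
    private readonly Dictionary<int, HashSet<string>> _keysByContent = new Dictionary<int, HashSet<string>>();

    public string GetOrAdd(string key, Func<IRenderingContext, string> renderingCallback)
    {
        lock (_sync)
        {
            if (_html.TryGetValue(key, out var cached))
                return cached;

            // Cache miss: render, then record which content the HTML depends on.
            var context = new RenderingContext();
            var html = renderingCallback(context);
            _html[key] = html;
            foreach (var contentId in context.ContentIds)
            {
                if (!_keysByContent.TryGetValue(contentId, out var keys))
                    _keysByContent[contentId] = keys = new HashSet<string>();
                keys.Add(key);
            }
            return html;
        }
    }

    // Call when a content item is published: drops every fragment that depends on it.
    public void Evict(int contentId)
    {
        lock (_sync)
        {
            if (!_keysByContent.TryGetValue(contentId, out var keys))
                return;
            _keysByContent.Remove(contentId);
            foreach (var key in keys)
                _html.Remove(key);
        }
    }
}

public static class HeadingRenderer
{
    public static string Render(IHtmlCache cache, int contentId, string heading)
    {
        // Key built from content, property and template, as described above.
        var key = "content:" + contentId + "/property:Heading/template:h1";
        return cache.GetOrAdd(key, context =>
        {
            context.AddDependency(contentId);
            return "<h1>" + heading + "</h1>";
        });
    }
}
```

Calling HeadingRenderer.Render twice for the same content returns the cached HTML the second time, even if the heading argument has changed; only after Evict for that content id does the callback run again.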

PropertyFor and Content rendering

To inject the HTML cache into the CMS rendering I chose to intercept PropertyRenderer, ContentAreaRenderer and IContentRenderer. The interceptors basically check whether the HTML output for the item to be rendered is already cached. If so, the HTML is returned; otherwise the default renderer is called and the output is captured and cached. During the rendering the interceptors also create dependencies on the content item(s) that the output depends on. That way the right HTML elements get evicted when, for example, a content item is published.

Personalization

This caching technique is somewhat related to output caching, but with output caching the whole HTML for a page is cached as one unit. One problem is that it is hard to know which content items affected the rendering, and hence the whole output cache is evicted (for all pages) when some content is published. Output caching is also hard to use with personalization, since it is all or nothing that gets cached. With Russian doll caching it was possible to handle personalization much more nicely. Say, for example, that a page contains a content area or an XhtmlString that is personalized. Then we can avoid caching that specific property but still cache the HTML for other items on that page, such as menus, listings and other content areas or XhtmlStrings.
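The idea of skipping the cache for just the personalized parts can be sketched like this. FragmentRenderer and PageDemo are hypothetical names for illustration, and the isPersonalized flag is an assumption standing in for however the renderer detects personalization.

```csharp
using System;
using System.Collections.Generic;

// Illustrative only: FragmentRenderer is a hypothetical helper.
public sealed class FragmentRenderer
{
    private readonly Dictionary<string, string> _cache = new Dictionary<string, string>();

    public string Render(string key, bool isPersonalized, Func<string> render)
    {
        // Personalized output differs per visitor, so bypass the cache entirely.
        if (isPersonalized)
            return render();

        if (!_cache.TryGetValue(key, out var html))
            _cache[key] = html = render();
        return html;
    }
}

public static class PageDemo
{
    public static string RenderPage(FragmentRenderer renderer, string visitorGroup)
    {
        // The menu is the same for everyone and is cached.
        var menu = renderer.Render("menu", false, () => "<nav>Menu</nav>");
        // The main body is personalized and is rendered on every request.
        var body = renderer.Render("mainbody", true, () => "<p>Hello " + visitorGroup + "</p>");
        return menu + body;
    }
}
```

Note that any outer fragment that embeds personalized output has to skip the cache as well, otherwise the first visitor's version would be frozen into the outer layer.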

Alloy modifications

I have uploaded a somewhat modified Alloy site together with the code for the HTML cache to my GitHub repository. I have added a block type, NowBlock, which can be used to verify that personalization of ContentArea and XhtmlString works as expected. I have also added an action filter that writes out the rendering time for the request in the bottom left of the page. There are also a no-op and an in-process implementation of IDistributedCache; you select which IDistributedCache implementation is registered in Alloy/HtmlCache/DistributedCacheConfigurationModule.cs. That can be used to compare the rendering time for the in-process cache, the Redis cache and no HTML cache (the content cache is still used in that case).

Menu

I have changed the menu in Alloy/Helpers/HtmlHelpers.cs so that it uses the HTML cache. You can see in the code that it also caches at several layers: first the menu as a whole, but also the individual menu items. This means that if one item is evicted, that item and the menu as a whole need to be re-rendered, but the HTML output from all other items remains and can be reused to render the menu as a whole.

Footer

In Alloy all pages use the same footer, which has some page listings. However, those page listings do not depend on the currently rendered page; instead they are defined on the start page. Therefore the PropertyFor calls in Footer.cshtml have been modified to pass in which content they depend on (the default behaviour is the currently rendered content).

Async

Since calls to Redis are out-of-process calls, I initially thought it would make sense to use the async overloads of the methods. However, my impression was that the sync calls were much more stable, and since the MVC 5 APIs I interact with are synchronous, I chose the sync methods. Redis seems to be lightning fast, so I have not seen any problems with this. But if this were to be implemented for ASP.NET Core I would probably look at the async alternatives again.

Performance

I measure the rendering times by adding an action filter (defined in Alloy/HtmlCache/RenderTimeFilter.cs) that starts a stopwatch in OnActionExecuting and stops it in OnResultExecuted. So the measurement is the time for server-side rendering (it does not include the time for network transfer). The actual figures are not that interesting since they depend on your machine etc., but the comparison between the different options is interesting. Also, running Alloy locally on the machine is fast anyway, so it can be hard to see or feel the difference.
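For reference, a filter of this kind can be sketched roughly as follows. This is a simplified illustration and not the exact code in RenderTimeFilter.cs; the class name, the Items key and the inline style are assumptions. It needs a reference to System.Web.Mvc (MVC 5), so it only compiles inside such a project.

```csharp
using System.Diagnostics;
using System.Web.Mvc;

// Illustrative sketch; the class name, Items key and markup are made up for this example.
public class RenderTimeSketchFilter : ActionFilterAttribute
{
    private const string StopwatchKey = "RenderTimeSketchFilter.Stopwatch";

    public override void OnActionExecuting(ActionExecutingContext filterContext)
    {
        // Only time the outermost request, not child actions such as partial controllers.
        if (filterContext.IsChildAction)
            return;

        filterContext.HttpContext.Items[StopwatchKey] = Stopwatch.StartNew();
    }

    public override void OnResultExecuted(ResultExecutedContext filterContext)
    {
        if (filterContext.IsChildAction)
            return;

        var stopwatch = filterContext.HttpContext.Items[StopwatchKey] as Stopwatch;
        if (stopwatch == null)
            return;

        stopwatch.Stop();

        // Append the elapsed server-side time, pinned to the bottom left of the page.
        filterContext.HttpContext.Response.Write(
            "<div style=\"position:fixed;left:0;bottom:0\">" +
            stopwatch.ElapsedMilliseconds + " ms</div>");
    }
}
```

The stopwatch is kept in HttpContext.Items rather than in a field because a filter instance can be shared between requests.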

Comparing the results for the page "About us", which has no personalization but has a top and a left menu, some listings in the footer and some content properties, I get:

No HTML cache ~20-25 ms

Redis ~5-8 ms

In-memory 3-5 ms

When testing on News&Events, which has a PageListBlock instance that prevents caching, I get the following results:

No HTML cache ~25-30 ms

Redis ~15-18 ms

In-memory ~12-15 ms

In this case the page list block prevents caching of the content area. Also, the page list block is rendered through a partial controller, so the effect is not as significant for this page (the same applies to the pages Alloy Plan, Alloy Track and Alloy Meet, which all have PageListBlock instances).

So the result is that when no personalization is used, the improvement is massive! And when there is personalization we also see a significant improvement, even though it is not equally impressive (especially when the non-cached item is a block rendered through a partial controller). Another thing to notice is that Redis as a distributed cache is not much slower than an in-memory cache.

Thoughts

In my implementation I went "all-in", meaning it caches a whole lot, for example the output of all properties. It would be possible to adjust it to cache just some specific property types (e.g. ContentArea and XhtmlString) or to avoid caching property output at all. A suggestion could be to profile the application to see what gives the most benefit (menus and listings are good candidates) and start with that. Especially if you cache in-process, you might want to limit what to cache. However, I strongly suggest a distributed cache, since that also addresses the cold start scenario.

Give it a spin

The code can be cloned from my GitHub repository. It includes an Alloy site that you can run to test the code on; it should be enough to just press F5. I have not tested it on any larger site, but as I see it those sites should be the ones that benefit most from this (especially if you use the distributed cache). To run the Redis implementation you need to install Redis locally or on some other machine. You could create it in Azure as well, but that is probably a bit unfair (when you compare with e.g. in-process) unless you deploy the application to Azure as well.

Disclaimers

This is not officially supported by EPiServer; you are free to use it as you like, at your own risk.

It is currently not of production quality; for example, there are no tests and no fault handling.

It would be nice to see a comparison against this and the 2 common inbuilt caching mechanisms of

We currently use the second approach and were looking at moving to the standard recommended ContentOutputCache, which works load balanced and on the DXC. The main issues are having to extend it to support visitor groups, and the inability to handle donut caching scenarios beyond block publish changes.

It would probably be possible to add an additional layer for the whole page (like ordinary output caching) by writing an action filter that checks if something is already cached and, if so, writes the output to the response directly. This would have the benefit over ordinary output caching that it can have dependencies on the actual content it depends on, meaning an individual content publish does not need to evict all items from the cache (as with ordinary output caching).

I will see if I can add such a filter.

Great. As mentioned, what I'd be keen on is metrics on how the different caching implementations perform. As each implementation works differently, it's always a useful factor to know about.

I have now updated the code with an HtmlActionFilter that adds a "top" layer, which is the whole page output (like when using output cache). It can be enabled by uncommenting the registration line in Alloy/HtmlCache/DistributedCacheConfigurationModule.cs.

Note however that it will make the measurement that I added to response useless, since the result is part of the cached result...

Regarding the comparison of the different output caches: OutputCacheAttribute in MVC works differently for "ordinary" requests and for child action requests. The former use the ordinary output cache system in ASP.NET, while child actions are output cached in an in-memory cache. However, it is not possible to evict items from that in-memory cache. That is the reason the ContentOutputCacheAttribute is not available for child actions.

Regarding ordinary output caching in ASP.NET, the performance will differ depending on which provider you use (file system based, Redis). A custom output cache provider like Redis will, however, not support eviction through content changes, see https://world.episerver.com/forum/legacy-forums/Episerver-CMS-6-CTP-2/Thread-Container/2010/11/Custom-outputcache-provider-with-ASPNET-4/

Previously I disabled caching for PageListBlock (because it does some searching, which makes it hard to determine dependencies). I have now, however, added a property IRenderingContext.DependentOnAllContent so that a complex component like PageListBlock can be cached but gets evicted whenever any content item is published (like how ordinary output cache works).
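The eviction rule can be sketched like this. Only the property name DependentOnAllContent comes from the actual code. Its bool type, the RenderingContext and HtmlCache classes and the OnContentPublished method are assumptions made up for this illustration.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public sealed class RenderingContext
{
    // Content items the rendered HTML depends on.
    public HashSet<int> ContentIds { get; } = new HashSet<int>();

    // Property name from the post; the bool type is an assumption.
    public bool DependentOnAllContent { get; set; }
}

// Illustrative only: HtmlCache and OnContentPublished are hypothetical names.
public sealed class HtmlCache
{
    private sealed class Entry
    {
        public string Html;
        public RenderingContext Context;
    }

    private readonly Dictionary<string, Entry> _entries = new Dictionary<string, Entry>();

    public string GetOrAdd(string key, Func<RenderingContext, string> render)
    {
        if (_entries.TryGetValue(key, out var entry))
            return entry.Html;

        var context = new RenderingContext();
        var html = render(context);
        _entries[key] = new Entry { Html = html, Context = context };
        return html;
    }

    // Evicts entries that depend on the published item, plus every entry
    // that declared a dependency on all content.
    public void OnContentPublished(int contentId)
    {
        var stale = _entries
            .Where(e => e.Value.Context.DependentOnAllContent
                        || e.Value.Context.ContentIds.Contains(contentId))
            .Select(e => e.Key)
            .ToList();

        foreach (var key in stale)
            _entries.Remove(key);
    }
}
```

A page list fragment that sets DependentOnAllContent is then re-rendered after any publish, while a fragment that only depends on content item 5 survives a publish of item 7.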

After the change, for the page News&Events (as above), which has a PageListBlock, I get the following results:

No HTML cache ~25-30 ms

Redis ~5-8 ms

In-memory ~4-6 ms

That's more what we like!

I also tested on Alloy Track, which has a personalized MainBody (XhtmlString). In that case I get the following results:

In-memory 5-8 ms

Redis 6-10 ms

No html cache ~25-30 ms

Now this is also more what we like: we see that a single personalized XhtmlString is not that costly. That means it was not the personalization as such that was the expensive part, but the use of a partial controller (which was not cacheable).

Cool! Something that will move in to the Epi product?

Oh! I like it!

Nice work, will look more in depth when I have the chance :-)

It might end up in the product in some form or another at some time. The usage will probably be very different, though (what and how much to cache), depending on whether a distributed cache (like Redis) is available. So in that case I think there should be some opt-in registration model where the partner could easily set up what to cache.